Add RabbitMQ and go-cron to your DigitalOcean droplet [Part 1]

Disclosure

A modern web application in 2022 uses dozens of third-party services. These services simplify application development so much that web development can feel almost too easy.

This is even more apparent in distributed systems. Relying on your cloud provider's managed services lets you focus on the core components that matter most to the business.

For example, instead of configuring message brokers on Linux servers, you can use a “Queue as a Service” solution. Do you want to run scheduled tasks? Don’t bother setting up cron workers on your servers; just use a “Function as a Service” solution.

If you are working in a high-traffic environment where downtime cannot be tolerated, this approach is entirely reasonable: instead of maintaining a DIY solution, you delegate the liability to a third-party service.

So why bother?

In this article, I will walk through configuring a VPS. The main focus will be on queues (RabbitMQ) and cron jobs (go-cron).

I am writing this article for two main reasons. If you share the same curiosity, this article is for you, whatever your level.

- To appreciate how things were done back in the day.

- To develop a practical understanding of how queues and cron workers can be implemented on a web server.

First, there are the stories you hear from industry veterans. Imagine you have just started at a new company and have been assigned to set up or maintain an application deployed on an on-premises server. The configuration is massive. Maybe several RDBMSes run on the same machine, and cron workers run overnight to handle various tasks.

Second, I think it is very difficult to work in an environment where you do not understand how the different components operate at a lower level. This reminds me of the movie “Ford v Ferrari”, in which the story of driver and mechanic Ken Miles was very impressive. You may have heard that many other racing drivers, such as Michael Schumacher, were also good mechanics.

Why DigitalOcean?

For this exercise, I decided to use a DigitalOcean (DO) droplet instead of the more popular AWS EC2. Droplets are really easy to set up, and I find the DO dashboard very intuitive.

You can use the most basic plan with an up-to-date Ubuntu release and deploy it to the data centre closest to you. If you get stuck, you can find really good articles on DigitalOcean's blog.

Assumptions

To make this exercise a bit more interactive, I deployed a RESTful API that handles HTTP requests which trigger the enqueue process. The application runs in the background as a systemd service and starts automatically when the server boots. You can find the repo here (note that this application uses PostgreSQL; if you want to use it, you will need to simplify it or code up your own).

Building the RESTful API is not a compulsory step for this exercise, and I will not cover how to build it or how to deploy it to our VPS.

We will keep things simple (KISS) and use hard-coded values in our messages and emails. The purpose of this exercise is to replicate the workflow, not the outcome.

Final VPS configuration

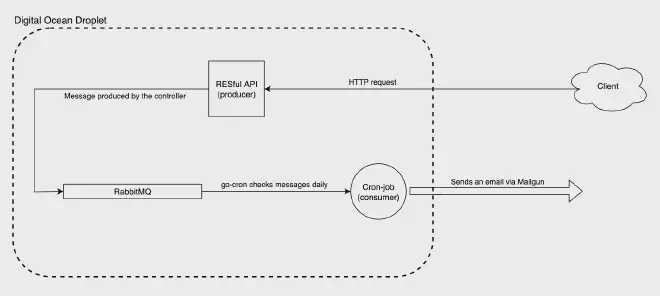

This diagram shows the final state of my VPS.

In the next chapter, Part 1, we will focus on setting up RabbitMQ and adding enqueue functionality to our app.

Once we have confirmed that our queue holds the messages enqueued by our app, we will move on to Part 2, where we will build a Go application that consumes those messages. This consumer app will also run as a systemd service. It will use clockwork scheduling (via the go-cron library) to check the message queue every day (or every hour, depending on your choice) and send emails.

For the email delivery service, we will use Mailgun’s Go client library.